All In One Digital Human Video Generator

Create your AI Avatar with just an image, a voice clip, and a video!

AI Avatar - Create Digital Human with Perfect Lip Sync

Generate stunning Digital Humans with our advanced OmniAvatar AI technology. From Asian actresses and European celebrities to K-pop idols and fashion icons, create your unique virtual identity with perfect lip sync.

Asian Actress

Ultra-realistic rendering

European Celebrity

Fashion-forward style

K-pop Idol

Flawless makeup & style

South Asian Man

Professional style

Ultra-Realistic Digital Human Rendering

Advanced OmniAvatar AI technology creates lifelike Digital Humans with perfect lip sync and natural expressions.

Diverse Digital Human Styles

From Asian actresses and European celebrities to K-pop idols and fashion icons, create any Digital Human style with OmniHuman.

Instant OmniHuman Generation

Create professional Digital Humans with perfect lip sync in minutes using our OmniHuman AI technology.

Zero Learning Curve

No design skills required. Anyone can create stunning Digital Humans with perfect lip sync using OmniHuman.

Ready to Create Your AI Avatar?

Experience the power of our OmniAvatar AI technology and create stunning virtual characters in minutes.

* All virtual characters shown above are generated using our OmniAvatar AI technology, showcasing diverse styles from Asian actresses to European celebrities and K-pop idols.

OmniAvatar Digital Human with Perfect Lip Sync

Transform your static images into dynamic Digital Humans with perfect lip sync technology. Upload a photo and audio, then watch as OmniAvatar AI creates a realistic talking Digital Human with natural expressions and movements.

Cheerful Young Woman

Natural expressions with perfect lip sync

Cyberpunk Streamer

Futuristic style with dynamic movements

Gaming Streamer

Energetic personality with perfect sync

Sports Host

Professional presentation with clear articulation

Source Image + Audio = Digital Human with Lip Sync

OmniAvatar AI technology perfectly synchronizes lip movements to create realistic talking Digital Humans

Perfect For Digital Human Creation

Content Creators

Create virtual Digital Human hosts for videos, live streams, and digital content with perfect lip sync and natural expressions using OmniHuman.

Brand Marketing

Design Digital Human brand ambassadors and virtual spokespersons for campaigns with professional presentation and clear articulation powered by OmniHuman lip sync technology.

Social Media

Build unique Digital Human identities for social media presence with energetic personality, dynamic movements, and perfect lip sync using OmniAvatar technology.

Ready to Create Your Digital Human with Perfect Lip Sync?

Experience the power of OmniHuman AI technology and create stunning Digital Human videos with realistic movements, expressions, and perfect lip sync.

Realistic Digital Human Movements

Natural body language and facial expressions with perfect lip sync

Perfect Lip Sync Technology

Accurate audio-video synchronization powered by OmniHuman AI

Fast Digital Human Generation

Quick Digital Human video creation in minutes using OmniHuman

Virtual Human - Create Dream Virtual Idols with OmniHuman

Use advanced OmniHuman virtual human technology to create unique virtual idols. From metal-style girl group rappers to anime-style girl group vocalists, from full-body poses to half-body close-ups, build your dream virtual world with OmniHuman.

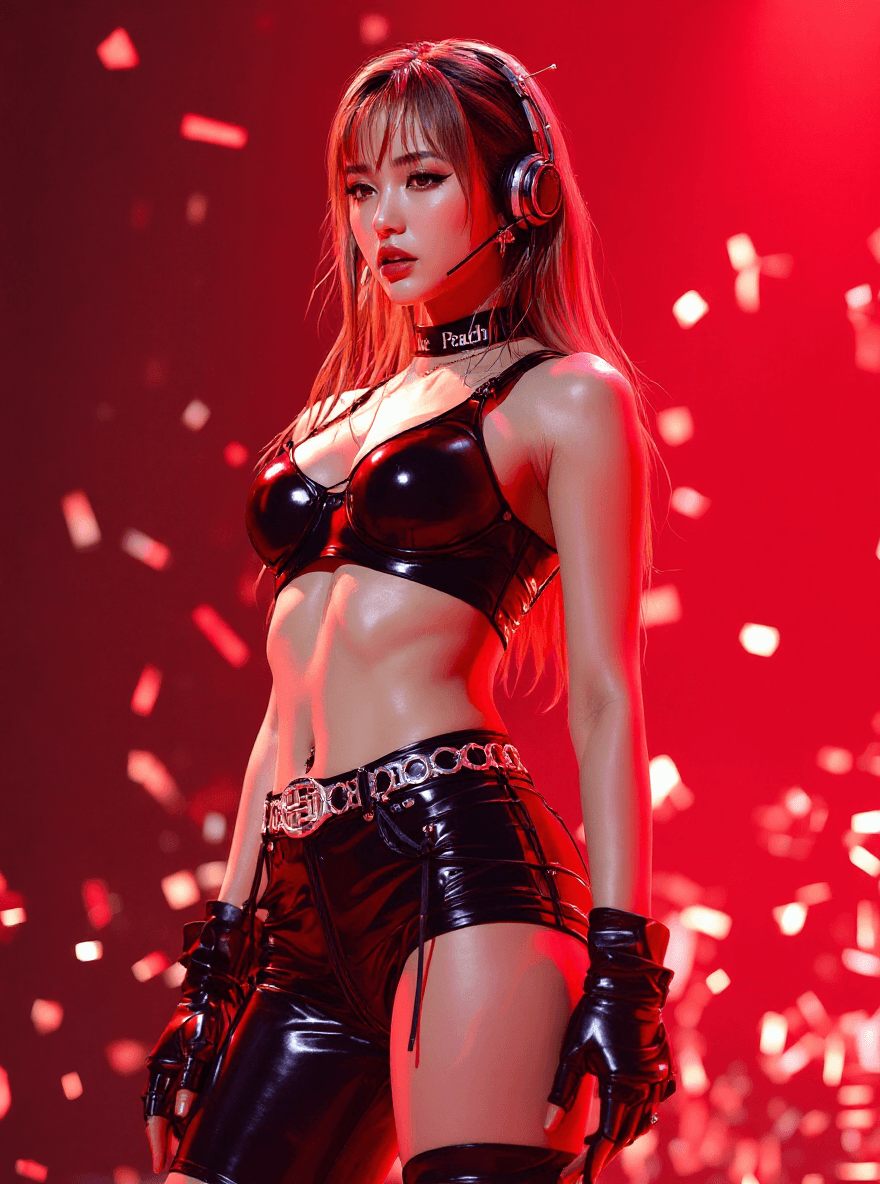

Metal Style Girl Group Rapper

Full body pose showcase

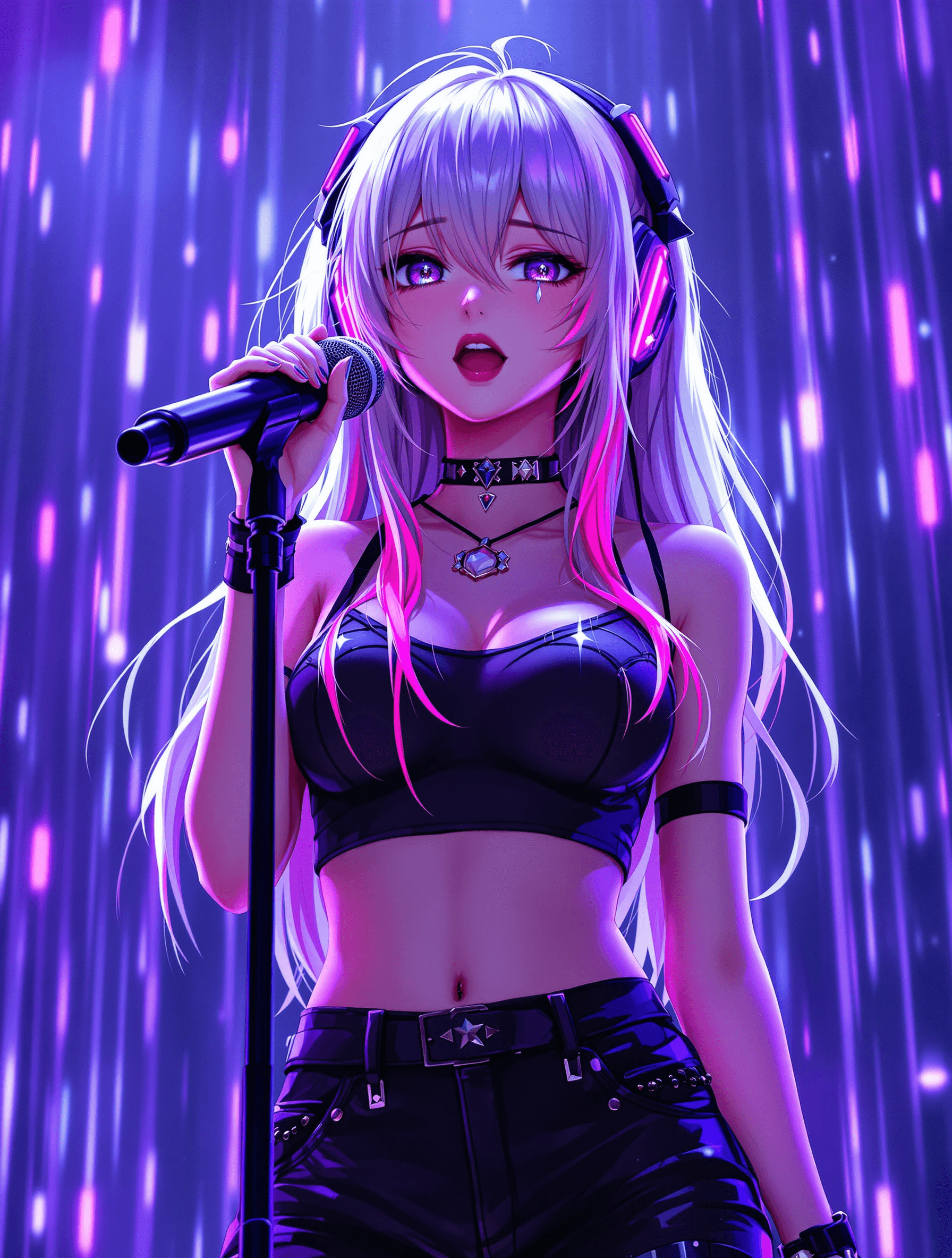

Anime Style Girl Group Vocalist

Half body close-up shot

Multi-angle Virtual Human

Image expansion technology

New Virtual Human Style

Advanced AI generation

Holographic Virtual Human Rendering

Advanced OmniHuman virtual human technology creates dream-like holographic effects, bringing virtual idols to life.

Diverse Virtual Styles

From metal style to anime style, from rappers to vocalists, create various virtual idol styles with OmniHuman.

Multi-angle Image Expansion

Advanced OmniHuman image expansion technology generates multi-angle virtual human displays from a single-angle image.

Dream Virtual World

Create your dream virtual world with OmniHuman and let virtual idols freely express themselves in digital space.
